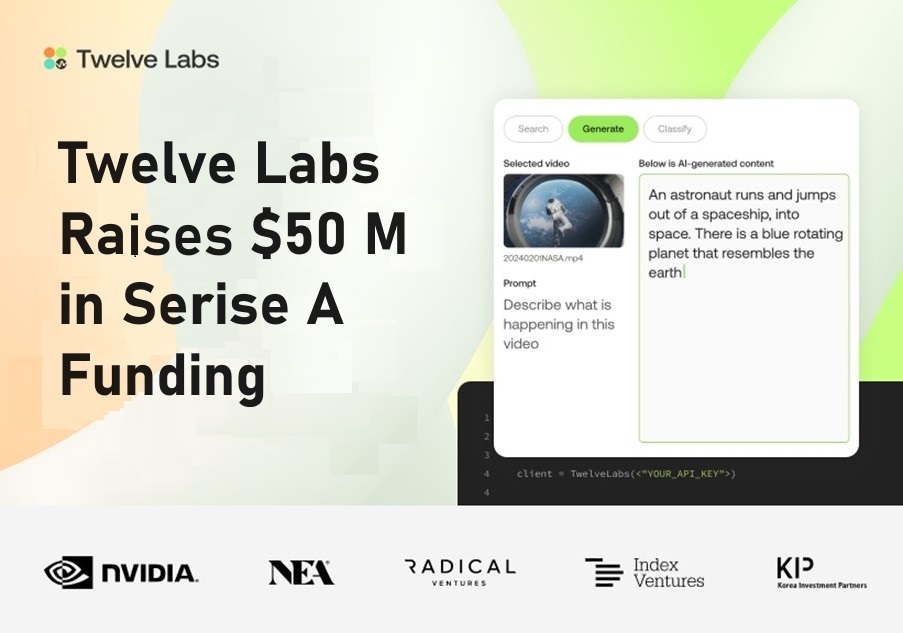

Twelve Labs, an AI development giant, has raised a Series A round of funding worth approximately $50 million.

Since its founding in 2021, Twelve Labs has been building multimodal neural network technology in anticipation of the potential of the video domain. In March, the company released Pegasus-1, a super-scale AI video language generation model, and Marengo 2.6, a multimodal video understanding model. The models demonstrated a performance advantage of up to 43% over commercial and open source video language models from Google, OpenAI and others, giving Twelve Labs a distinct competitive edge in video understanding technology. Through its multi-year cloud partnership with Oracle, the company gained early access to thousands of clusters of the latest NVIDIA graphics processing units (GPUs), including H100s, which it used to develop the world’s first foundation model for multimodal video understanding.

Twelve Labs’ Series A round was led by New Enterprise Associates (NEA) and NVentures, a subsidiary of NVIDIA, with participation from a number of other leading global investors, including Index Ventures, Radical Ventures, DreamWorks founder Jeffrey Katzenberg’s WndrCo, and Korea Investment Partners. This brings the cumulative investment in Twelve Labs to approximately $77 million (approx. KRW 106 billion).

Most of the investors who participated in the strategic investment in October last year invested again in the Series A, with NEA joining as a new investor. The investors were attracted to Twelve Labs by its unique multimodal neural network technology, its understanding of the video market and its talent. NEA is a leading global investor with more than $25 billion under management and has previously invested in Perplexity AI, Databricks and Coursera, among others. Notably, NEA partner Tiffany Luck has also joined Twelve Labs as a new board member.

Twelve Labs will use the investment to release updated versions of its Pegasus-1 and Marengo 2.6 models on a monthly basis. The company will also hire aggressively across the board. In the US, Twelve Labs is already attracting top talent from global tech companies such as Microsoft, Meta, NVIDIA, Intel and Cohere, and in Korea it plans to actively recruit talented AI and machine learning researchers and developers. The company is currently working with NVIDIA to improve the performance of TensorRT-LLM, which is specialised for existing language models, in order to enhance the learning capability of multimodal neural networks, and it plans to take the lead in the field of multimodal video understanding.

“Multimodal image understanding is a key component of generative AI,” said Mohamed Siddeek, NVIDIA vice president and head of NVentures. “With Twelve Labs’ outstanding image understanding technology and NVIDIA’s accelerated computing, we look forward to continuing our research collaboration to help enterprise customers.” John MJ Kim, Principal of Korea Investment Partners, said, “While the LLM market is in a ‘league of its own’ with OpenAI and other major tech companies, we believe Twelve Labs has the potential to become a global leader in the multimodal image understanding AI market, which is why we participated in this Series A following our strategic investment last year.”

“We currently have more than 30,000 users of our APIs, and earlier this year we began partnering with global media asset management platforms such as Vidispine, EMAM and Blackbird,” said Jae-sung Lee, CEO of Twelve Labs. “With this investment, we will accelerate the development and advancement of our video understanding models and expand our enterprise business to enable our APIs to be used in industries such as sports, media, advertising and security.”
